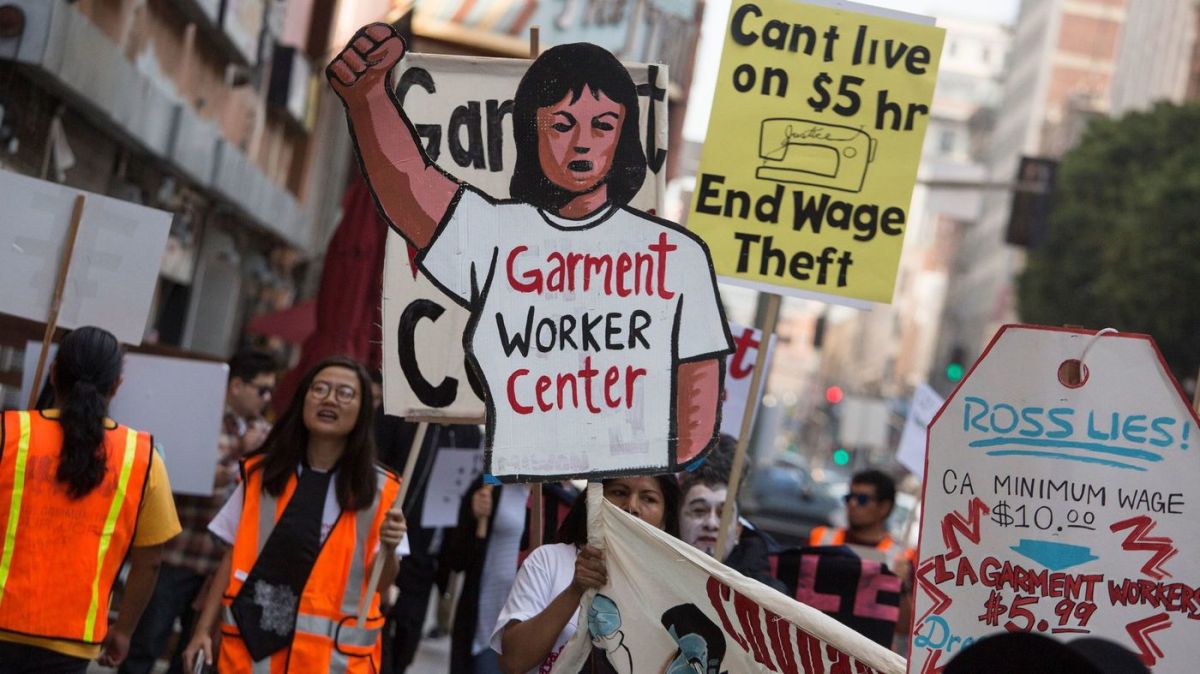

There is a saying that when something is punishable by a fine, it really means “legal, for a price”. Rogue UK employers seem to take this to heart. A shocking investigation by the Bureau of Investigative Journalism published this month shows the penalty system has barely been enforced: out of more than 4,800 firms fined since […]

I joined IIPP Prof Dr. Cecilia Rikap to discuss Dr. Plixavra Vogiatzoglou’s book on Mass Data Surveillance & Predictive Policing. 📺 Watch the event recording here: https://lnkd.in/epx_DUa8

Originally published for Jacobin. Ever since Robert Brenner published his essay “The Economics of Global Turbulence” in 1998, there has been a wide-ranging debate about his understanding of the period since the 1970s as a “long downturn.” Seth Ackerman and Aaron Benanav have recently been extending this debate in Jacobin. Some of the claims that Benanav puts forward in his reply to Ackerman, largely […]

Executive director of New York Taxi Workers Alliance Bhairavi Desai speaks as Uber drivers participate in a rally outside of the Uber headquarters on January 5, 2023 in New York City. (Michael M. Santiago / Getty Images) Originally published by Jacobin. There are as many ways to steal wages as there are to pay them. […]

Originally posted on Brave New Europe. The gig economy is often talked about as ‘the future of work’, but if we look at history we find that its wage model – paying per output, rather than per hour – actually goes back hundreds of years. In the 19th century, this was called ‘piece wages’, […]

Originally published in Jacobin. With the support of the GMB union, British workers at Amazon’s Coventry fulfillment center have turned a wildcat strike into a fight for a collective bargaining agreement. On a mid-April morning, hundreds of workers from Amazon’s Browns Lane fulfillment center in Coventry, England, formed a picket outside of the warehouse before […]

Technological change is often viewed as an exogenous force, a deus ex machina “outside the domain of economic theory” (Schumpeter 1911:11), or an endogenous force, subordinate to the institutional régulation of capitalism (Gentili et al. 2020; Montalban et al. 2019; Spencer 2017). Drawing on neo-Schumpeterian and régulation theory, this paper sublates these antithetical positions to form an alternative approach that re-examines the […]

Cloudwork is absorbing an increasing proportion of the world’s labour and has been significantly boosted by the COVID-19 pandemic (ILO, 2021). We use cloudwork to refer to remotely performed labour mediated by digital labour platforms – companies that connect workers with clients through a digital interface, exert control over and extract value through the labour process […]

On the Trades Union Congress’ (TUC, 2019) 15th annual ‘Work Your Proper Hours Day’, TUC General Secretary Frances O’Grady asserted, ‘It’s not okay for bosses to steal their workers’ time’, pointing to the chronic theft of workers’ time and money in the United Kingdom (UK). Over five million UK workers laboured a total of two […]

The Wage Theft Epidemic

Originally published for Tribune. Every society with a concept of property also has a concept of theft. They are mutually constitutive, as Proudhon argued in 1840. To privatise public land is a form of theft; to alienate an object from its rightful owner is a form of theft; to plagiarise another person’s idea is a […]